Hot on the heels of announcing Nova Forge, a service for training custom Nova AI models, Amazon Web Services (AWS) announced more tools for enterprise customers to create their own frontier models.

AWS announced new capabilities in Amazon Bedrock and Amazon SageMaker AI at its AWS re:Invent conference on Wednesday. These new features are designed to make building and fine-tuning custom large language models (LLMs) easier for developers.

The cloud provider is introducing serverless model customization in SageMaker, which lets developers start building a model without having to think about compute resources or infrastructure, Ankur Mehrotra, general manager of AI platforms at AWS, told TechCrunch in an interview.

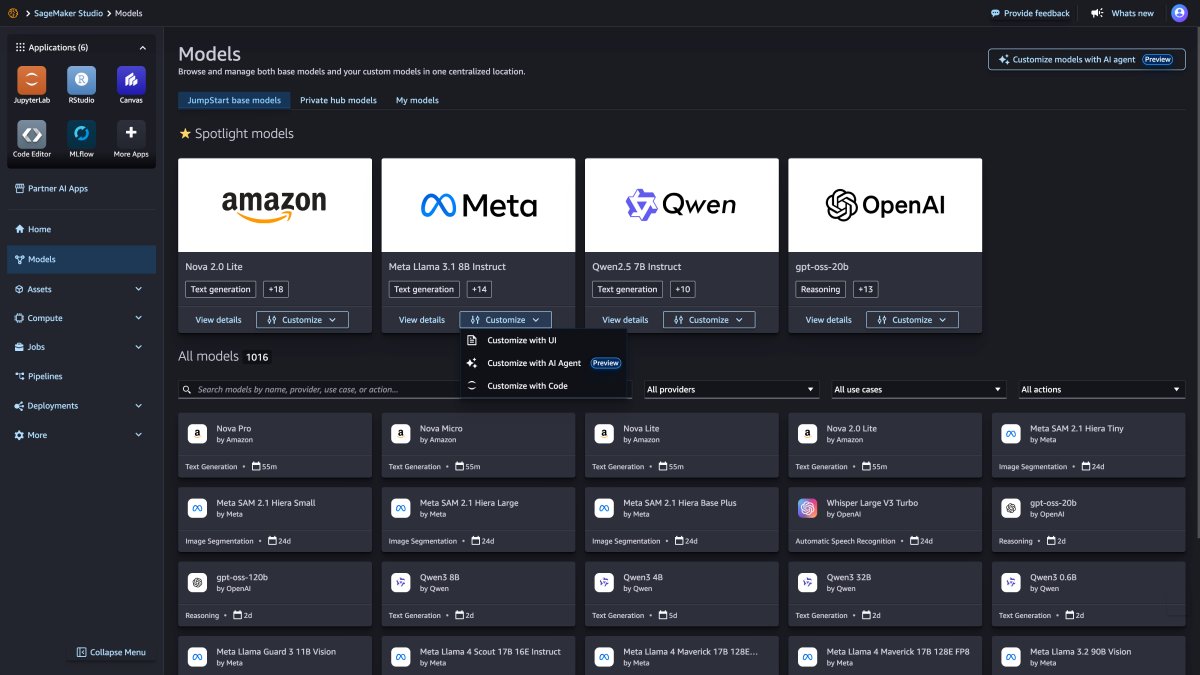

To access these serverless model-building capabilities, developers can follow either a self-directed, point-and-click path or an agent-driven experience in which they prompt SageMaker using natural language. The agent-led feature is launching in preview.

“If you’re a healthcare customer and you wanted a model to be able to better understand certain medical terminologies, you can just point SageMaker AI [at your data], if you have labeled data, select the technique, and then SageMaker goes and [fine-tunes] the model,” said Mehrotra.

This feature can be used to customize Amazon’s own Nova models and certain open source models (those with publicly available model weights), including DeepSeek and Meta’s Llama.

AWS is also launching Reinforcement Fine-Tuning in Bedrock, which lets developers choose either a reward function or a preset workflow; Bedrock then runs the customization process automatically from start to finish.


Frontier LLMs (meaning the most advanced AI models) and model customization appear to be a focus for AWS at this year’s conference.

During AWS CEO Matt Garman’s keynote on Tuesday, AWS announced Nova Forge, a service in which AWS builds custom Nova models for its enterprise customers for $100,000 a year.

“Many of our customers ask, ‘If my competitor has access to the same model, how do I differentiate myself?’” Mehrotra said. “‘How do I build unique solutions that are optimized for my brand, for my data, for my use case, and how do I differentiate myself?’ What we’ve found is that the key to solving that problem is being able to create custom models.”

AWS has yet to gain a significant user base for its AI models. A July survey by Menlo Ventures found that companies strongly prefer models from Anthropic, OpenAI and Google’s Gemini over others. But the ability to customize and fine-tune LLMs on its platform could begin to give AWS a competitive advantage.
