A growing number of browsers are experimenting with agent features that will perform actions on your behalf, such as reserving tickets or shopping for various items. However, these agent features also come with security risks that can lead to loss of data or money.

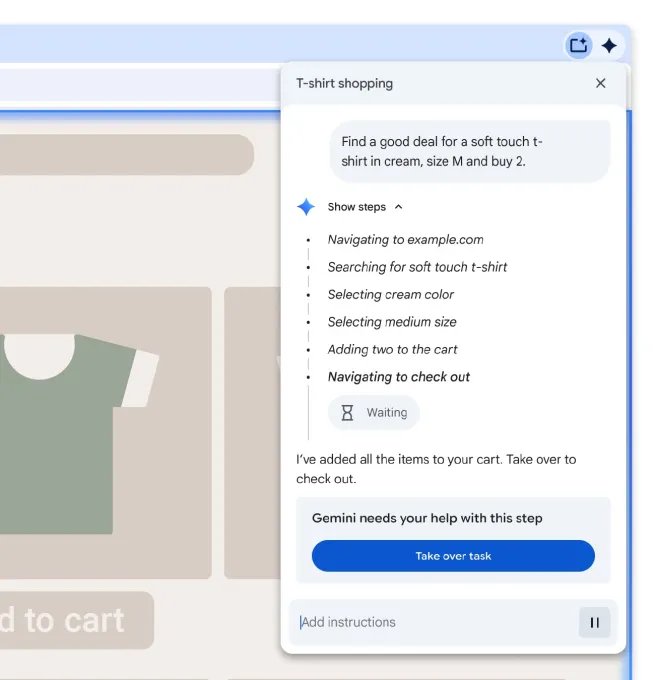

Google detailed how it plans to protect Chrome users when agents act on their behalf, relying on observer models and on asking for user consent before certain actions. The company previewed agent features on Chrome in September and said those features will roll out in the coming months.

The company said it uses several models to keep agent actions in check. Google said it built a User Alignment Critic using Gemini to scrutinize the action items produced by the planner model for a particular task. If the critic model believes the planned actions do not serve the user's goals, it asks the planner model to reconsider its strategy. Google noted that the critic model sees only the metadata of the proposed action, not the actual web content.
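To make the planner/critic loop concrete, here is a minimal sketch in Python. It is not Google's implementation: the `ProposedAction` type, the `plan_with_critic` function, the `max_rounds` limit, and the fallback of returning `None` are all hypothetical. The point it illustrates is the one Google described: the critic receives only metadata about each proposed action, never page content, and can send the planner back to revise.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class ProposedAction:
    # Hypothetical metadata-only record: no raw web content is stored here,
    # so a critic that receives it cannot see the page itself.
    kind: str       # e.g. "click", "type", "navigate"
    target: str     # short description of the element or URL
    rationale: str  # the planner's stated reason for the step


# (goal, feedback from the critic or None) -> list of proposed actions
Planner = Callable[[str, Optional[str]], List[ProposedAction]]
# (goal, plan) -> None if the plan serves the goal, otherwise feedback text
Critic = Callable[[str, List[ProposedAction]], Optional[str]]


def plan_with_critic(goal: str, planner: Planner, critic: Critic,
                     max_rounds: int = 3) -> Optional[List[ProposedAction]]:
    """Ask the planner for a plan and let the critic veto it up to max_rounds times."""
    feedback: Optional[str] = None
    for _ in range(max_rounds):
        plan = planner(goal, feedback)
        feedback = critic(goal, plan)
        if feedback is None:
            return plan  # the critic judged the plan aligned with the user's goal
    return None  # hypothetical fallback: give up and hand control back to the user
```

Capping the number of rounds is a design choice in this sketch so that a planner and critic that keep disagreeing cannot loop forever.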

What's more, to prevent agents from accessing unauthorized or untrusted sites, Google uses Agent Origin Sets, which limit the model to a set of read-only origins and a set of read-write origins. Read-only origins are those from which Gemini is allowed to consume content. For example, on a shopping site, the listings are relevant to the task, but the banner ads are not. Similarly, Google said the agent may only click or type in certain iframes on a page.

“This delimitation enforces that only data from a limited set of origins is available to the agent, and that data can only be passed to the writable origins. This limits the threat vector for cross-origin data leaks. This also allows the browser to enforce some of this separation, such as by not even passing data to the model that is outside the readable set,” the company said in a blog post.
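The separation Google describes can be pictured as a simple access-control check. The Python sketch below is a hypothetical illustration, not Chrome's code: the `AgentOriginSet` class, its method names, and the example origins are invented. Content from frames outside the readable set is dropped before it reaches the model, and clicks or typing are allowed only on writable origins.

```python
from dataclasses import dataclass, field
from typing import Dict, Set
from urllib.parse import urlsplit


def origin_of(url: str) -> str:
    """Return the scheme://host[:port] part of a URL, i.e. its web origin."""
    parts = urlsplit(url)
    return f"{parts.scheme}://{parts.netloc}"


@dataclass
class AgentOriginSet:
    # Hypothetical policy object: origins the agent may read, and origins it may act on.
    readable: Set[str] = field(default_factory=set)
    writable: Set[str] = field(default_factory=set)

    def filter_context(self, frames: Dict[str, str]) -> Dict[str, str]:
        """Keep only frame content from readable origins; everything else never reaches the model."""
        return {url: text for url, text in frames.items()
                if origin_of(url) in self.readable}

    def may_act_on(self, url: str) -> bool:
        """Allow clicks or typing only on writable origins."""
        return origin_of(url) in self.writable
```

For example, a policy with `readable={"https://shop.example"}` and `writable={"https://shop.example"}` would pass a product listing from `https://shop.example/listing/42` to the model but silently drop a banner served from `https://ads.example`, and it would refuse to let the agent click that banner.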

Google also checks page navigation by running URLs through another observer model. This can prevent the agent from navigating to malicious, model-generated URLs, the company said.

The search giant said it also hands the reins to users for sensitive tasks. For example, when an agent tries to navigate to a sensitive website, such as one holding your banking or medical data, it first asks the user. For sites that require a login, it will ask the user for permission to let Chrome use the password manager. Google said the agent model has no exposure to password data. The company added that Chrome will ask users before the agent takes actions such as making a purchase or sending a message.
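A consent gate like the one Google describes can be sketched as a check that runs before each agent step. The Python below is illustrative only: the `Action` values, the `NEEDS_CONSENT` and `SENSITIVE_CATEGORIES` sets, and the `ask_user` callback are hypothetical stand-ins for whatever Chrome actually uses.

```python
from enum import Enum, auto
from typing import Callable, Optional


class Action(Enum):
    NAVIGATE = auto()
    CLICK = auto()
    USE_PASSWORD_MANAGER = auto()
    PURCHASE = auto()
    SEND_MESSAGE = auto()


# Hypothetical: actions that always need the user's explicit approval.
NEEDS_CONSENT = {Action.USE_PASSWORD_MANAGER, Action.PURCHASE, Action.SEND_MESSAGE}
# Hypothetical: site categories treated as sensitive when navigating.
SENSITIVE_CATEGORIES = {"banking", "medical"}


def requires_consent(action: Action, site_category: Optional[str] = None) -> bool:
    """Return True if the user must approve this step before the agent proceeds."""
    if action in NEEDS_CONSENT:
        return True
    return action is Action.NAVIGATE and site_category in SENSITIVE_CATEGORIES


def run_step(action: Action, site_category: Optional[str],
             ask_user: Callable[[str], bool]) -> str:
    """Pause and ask the user when required; otherwise let the step go ahead."""
    if requires_consent(action, site_category):
        if not ask_user(f"Allow the agent to perform {action.name.lower()}?"):
            return "blocked"
    return "done"
```

In this sketch the password manager is only ever a gated action; the agent never receives the password itself, mirroring Google's statement that the model has no exposure to password data.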

Google said it also runs a prompt-injection classifier to prevent unwanted actions and tests the agent's capabilities against attacks devised by researchers.

Other AI browser makers are also paying attention to security. Earlier this month, Perplexity released a new open source content detection model to prevent prompt injection attacks against agents.